Introduction

Overview

This document describes all the technical procedures to implement IDP MDM.

Purpose and Scope

This document describes how to configure and use the IDP integration layer to implement MDM using pre-defined templates for ingesting source data, extracting mastered data from Reltio, and enabling DCR and Real Time Integration.

Targeted Audience

The targeted audience for this document is Delivery and Support teams.

Onboarding Details

| SL No | Request | Instructions Link | Details |
|---|---|---|---|
| 1 | New Tenant Request MDM Release Calendar MDM Support Model | This page provides you information on tenant provisioning, OA release calendar, Support Model and communication. | |
| 2 | Tenant upgrade Request | Client Tenants are automatically upgraded as per the Release Calendar | No explicit request needs to be submitted by delivery. |
| 3 | Additional Prod Ops Request | This page provides you information on how to request for upgrading the warehouse size, how to enable DCR functionality, and any adhoc request to Prod Ops. | |

Setting up Reltio

Check MDM Reltio Setup to apply data-model changes in Reltio.

MDM 2.0 Key features

MDM 2.0 uses the OA platform to support the following functionality.

Inbound to Reltio

- Ingest any source data in generic import format into Reltio.
- Ingest OneKey US data into Reltio.
- Ingest OneKey global data into Reltio.
- Ingest MIDAS data into Reltio.
- Ingest Xponent data into Reltio.
- Ingest DDD data into Reltio.
- Ingest DDDMD data into Reltio.
- Ingest lookup codes from any source in a pre-defined format into RDM tenant.
- Ingest look up codes to RDM tenant when Reltio Data Tenant is used for OneKey US and Global data subscription.
- Capability to ingest any source data in any format into Reltio.
- Perform lookup code validation before loading any source data into Reltio.
- Extract any rejects from lookup code validation.

Outbound from Reltio

- Extract data from Reltio for downstream system.

Real Time Integration and Data Change Request

- Real time integration of data changes in MDM with Lexi to send to external system through streaming.
- Ingest OCE DCRs and External DCRs into Reltio.
- Configuration to auto-approve DCRs in IDP.
- DCR status updates to Lexi to keep the source systems aligned with DCR status through streaming.
- Consume OCE subscription flag from Lexi into Reltio.
- Consume crosswalk updates from OCE into Reltio.
- Distributed tracing to monitor DCR flow and troubleshoot any failures.
- Ex-US scenarios in DCRs like Create Entity and Update Entity, Create and Update Relations.

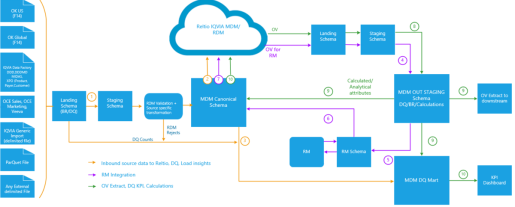

IDP-MDM end-to-end Data flow

*RM Integration and KPI, shown in the above diagram, are planned for future releases.

Overview of Data Flow

All source files provided by clients are placed in a dedicated S3 location (OKUS_F14, OKGBL_F14, Midas, DDD, XPO etc.). The data in the source files is loaded into source-specific landing tables, and from the landing tables into source-specific staging tables. From the staging tables, the data is transformed according to the transformation rules and loaded into canonical tables. Finally, the data in the canonical tables is posted to the Reltio tenant.

Reltio OV records are exported using the outbound plugin, which is called in IDP data pipelines. The data is then pushed into the S3 bucket and into the landing and staging tables. From staging, the OV JSON is flattened into views. The Customer and Product data in the views is extracted to separate flat files.
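The flattening step can be pictured with a small Python sketch. It assumes an entity JSON in which each attribute holds a list of values, each flagged with `ov`. The sample record, the attribute names and the `flatten_ov` helper are hypothetical and are not taken from the IDP views, which are defined in SQL.

```python
from typing import Any


def flatten_ov(entity: dict[str, Any]) -> dict[str, Any]:
    """Return one flat row holding the first OV value of each simple attribute."""
    row: dict[str, Any] = {"uri": entity.get("uri")}
    for name, values in entity.get("attributes", {}).items():
        # Keep only the values flagged as operational value (OV).
        ov_values = [v["value"] for v in values if v.get("ov")]
        row[name] = ov_values[0] if ov_values else None
    return row


# Hypothetical sample entity, for illustration only.
sample = {
    "uri": "entities/abc123",
    "attributes": {
        "FirstName": [
            {"value": "Jon", "ov": False},
            {"value": "John", "ov": True},
        ],
        "LastName": [{"value": "Smith", "ov": True}],
        "MiddleName": [],
    },
}

flat_row = flatten_ov(sample)
print(flat_row)
```

Each attribute becomes one column in the flat row, which is roughly the shape a flat-file extract needs. Nested attributes would require additional handling that this sketch leaves out.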

If RDM Validation is enabled for a source, the RDM Business Rule plug-in picks data from staging, validates the code fields defined in the JSON mapping configuration against the RDM_Source_Mapping table (MV), and writes the status of each record to the RDM_VALIDATION table. If the codes in the data file's code fields are found in the RDM_Source_Mapping table, the data is loaded into the canonical table without any error.
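The validation logic described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the plug-in's implementation: the `validate_codes` function, the `ValidationResult` type, the status values `VALID` and `REJECTED`, and the sample codes are all hypothetical, and an in-memory set stands in for the RDM_Source_Mapping table.

```python
from dataclasses import dataclass


@dataclass
class ValidationResult:
    record_id: str
    status: str  # hypothetical values: "VALID" or "REJECTED"
    failed_fields: list[str]


def validate_codes(records, code_fields, source_mapping):
    """Check each configured code field against the known (field, code) pairs."""
    results = []
    for record in records:
        failed = [
            field
            for field in code_fields
            if (field, record.get(field)) not in source_mapping
        ]
        status = "REJECTED" if failed else "VALID"
        results.append(ValidationResult(record["id"], status, failed))
    return results


# Hypothetical stand-in for the RDM_Source_Mapping lookup.
source_mapping = {("Specialty", "CARD"), ("Specialty", "ONC"), ("Country", "US")}
code_fields = ["Specialty", "Country"]
staged = [
    {"id": "r1", "Specialty": "CARD", "Country": "US"},
    {"id": "r2", "Specialty": "XXX", "Country": "US"},
]

results = validate_codes(staged, code_fields, source_mapping)
# Only records whose codes were all found move on to the canonical table.
canonical = [rec for rec, res in zip(staged, results) if res.status == "VALID"]
rejects = [res for res in results if res.status == "REJECTED"]
```

Every record receives a status, mirroring the per-record rows in RDM_VALIDATION, and the rejected results carry the failing field names so they can be extracted and reviewed.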

Inbound/Outbound Reltio

Inbound

The MDM import process loads data from a source (for example, an S3 bucket) into Reltio. All source data is persisted in the Snowflake canonical schema, and from the canonical schema the data is loaded into Reltio. The MDM data model can be found in MDM Data model. During the import process, source code values are standardized based on the codes loaded by the Generic Code Import pipeline. The default configuration from the source to the canonical schema is specified in the Data Interface Documents. The mapping from the canonical schema to Reltio is driven by the views defined in Plugin Views Creation. The inbound plugin connector is responsible for pushing the data into Reltio.

The processes needed to set up the data import process are as follows:

Generic Import

- Generic Code Import, see Generic Import Code Load.
- Generic Import Format (GIF) - Codes and Data Load, see Generic Import Format (GIF) - Codes and Data Load

Onekey

- Onekey US data load (the US pipeline template is enhanced to support loading additional countries under the US region), see Onekey US Data Load from F14.
- Onekey Global Data load, see Onekey GBL Data Load from F14.
- OneKey US Code load, see OneKey US Code Import from DT.
- OneKey Global Code load, see OneKey GBL Code Import from DT.
- Canonical HCP and HCO table duplicate entries are fixed.

MIDAS Data import, see MIDAS Data Load.

XPO Data import, see Xponent Data Load.

DDD Data import, see DDD Data Load.

DDDMD Data import, see DDDMD Data Load.

Outbound

Reltio OV records are exported using the outbound plugin, which is called in IDP data pipelines, and the data is then pushed into the staging area. From staging, the OV JSON is flattened into views with easy SQL. Extract layout mapping documents can be found under Data Interface Documents.

- A new prerequisite is added for the pipeline Reltio_MDM_Extract. Follow the instructions in MDM Reltio Extract.
- MDM outbound views, see MDM CM Extract Outbound Views.
- All the MDM data is exported from Reltio into a dedicated S3 location and then staged into Snowflake tables using the Outbound Plug-in.
- Only Customer Data from the staging tables is flattened into easy SQL views as per the interface defined, see DID of Customer MDM Outbound Extract in Data Interface Documents.
- Only Product Data from the staging tables is flattened into easy SQL views as per the interface defined, see DID of Product MDM Outbound Extract in Data Interface Documents.

DCR

Users such as customer sales representatives who do not have rights to update customer data objects can suggest changes via a process called Data Change Request (DCR). The IDP platform takes these requests from OCE Sales and external systems, processes them, and posts them to Reltio MDM using an IDP pipeline. MDM routes them into the appropriate Internal, External or Auto-Approved queue and sends the verification status back to IDP. Lexi reads the status of each object and syncs it with the OCE system.

IDP DCR component capabilities:

- Loads OCE DCRs into Reltio.
- Loads External DCRs from Lexi into Reltio.
- Updates the OCE/External DCR status (DCR creation/validation) using Lexi through IDP streaming.
- DCR routing and split support in real time.
- Submit and Trace DCR to Onekey.

For more information on how to load OCE DCRs into Reltio, see.

For more information on how to update the DCR status in OCE using streaming through Lexi, see Real Time Streaming.

Real Time Streaming

MDM streaming consumes Reltio messages from an AWS SQS queue, enriches them and publishes them on Anypoint MQ. There are two types of stream flows:

- DCR Flow: Reads DCR messages from the Amazon SQS DCR queue, stores them in the Event table and then publishes them to the Anypoint queue. There are two SCDF streams (dcrStore and dcrEnrichAnypoint).
- Customer/Entities Flow: Reads entities/relations/merge messages from the Amazon SQS CUS queue and then publishes them to the Anypoint queue. There are two SCDF streams (v2streamStore and v2streamEnrichAnypoint).

Dependencies

- Lexi: Anypoint queue name, user id and secret code
- AWS SQS: OA tenant request form requires to specify "Reltio Tenant ID" = <Reltio tenant ID, example. xXPsWCYuJQkIcVw>acing Enabled
- IDP API credentials

Streaming from Reltio to Lexi is near real time. It can send create, update and soft-delete events for any entity and relation of any domain. Streamed events flow from Reltio into an SQS queue, are then persisted into a Snowflake table, and are later sent to an Anypoint queue.
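The store-then-publish pattern can be illustrated with a minimal Python sketch. In-memory structures stand in for the SQS queue, the Snowflake table and the Anypoint queue. The function names, the event fields and the enrichment step are hypothetical, and nothing here reflects the actual SCDF stream code.

```python
import json
from collections import deque

sqs_queue = deque()       # stand-in for the SQS queue
event_table = []          # stand-in for the Snowflake event table
anypoint_queue = deque()  # stand-in for the Anypoint queue


def store_events():
    """Drain the inbound queue and persist each raw message."""
    while sqs_queue:
        event_table.append({"payload": sqs_queue.popleft(), "published": False})


def enrich_and_publish():
    """Publish every stored event that has not been sent yet."""
    for row in event_table:
        if row["published"]:
            continue
        event = json.loads(row["payload"])
        event["source"] = "MDM"  # hypothetical enrichment
        anypoint_queue.append(json.dumps(event))
        row["published"] = True


# Hypothetical sample events, for illustration only.
sqs_queue.append(json.dumps({"type": "ENTITY_CHANGED", "uri": "entities/abc123"}))
sqs_queue.append(json.dumps({"type": "ENTITY_REMOVED", "uri": "entities/def456"}))

store_events()
enrich_and_publish()
enrich_and_publish()  # a second run publishes nothing new
```

Persisting each message before publishing means a failed publish can be retried from the table without re-reading the source queue, which is one plausible reason for splitting the flow into a store stage and an enrich-and-publish stage.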

For more information about streaming, see Real Time Streaming.