LEXI_IDP_Consent_Common_Schema_Import_Baseline

Run this pipeline to create the common schema objects that any LEXI-IDP pipeline requires. A single instance of this pipeline serves all clients in an IDP environment, so you don't need to create a separate pipeline for each client.

- Download the pipeline template file LEXI_IDP_Consent_Common_Schema_Import_Baseline_{{BuildNumber}}.json from the /templates/product/LexiTemplates folder in the S3 bucket for your IDP environment.
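If you prefer to script the download, the following Python sketch shows one way to do it with the boto3 S3 client's `download_file` call. The bucket name and build number are values you supply. The sketch assumes the LexiTemplates folder sits at the root of the bucket, so the object key is written without the leading slash, and it requires boto3 plus AWS credentials that can read the bucket. The function names are hypothetical.

```python
from pathlib import Path

# Assumption: the folder is at the bucket root; S3 object keys have no leading slash.
TEMPLATE_PREFIX = "templates/product/LexiTemplates"


def template_key(build_number: str) -> str:
    """Return the S3 object key of the template for the given build number."""
    return (
        f"{TEMPLATE_PREFIX}/"
        f"LEXI_IDP_Consent_Common_Schema_Import_Baseline_{build_number}.json"
    )


def download_template(bucket: str, build_number: str, dest_dir: str = ".") -> Path:
    """Download the template into dest_dir and return the local file path.

    Requires boto3 and AWS credentials with read access to the bucket.
    """
    import boto3  # imported here so template_key() works without boto3 installed

    key = template_key(build_number)
    local_path = Path(dest_dir) / Path(key).name
    boto3.client("s3").download_file(bucket, key, str(local_path))
    return local_path
```

For example, `download_template("your-idp-bucket", "1234")` (both values hypothetical) would save the template for build 1234 in the current directory.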

- Open the LEXI_IDP_Consent_Common_Schema_Import_Baseline_{{BuildNumber}}.json file and replace every instance of the following tags with the appropriate values.

Parameters

| Pipeline parameter | Tag | Description | Example |
|---|---|---|---|
| `staging_schema` | `<STAGING_SCHEMA_VALUE>` | Staging schema name qualified with the database name, in the format `{{DatabaseName}}.{{staging schema}}`. | `IDP_IQVIALEXIDEV_OA_DEV_SANDBOX_ENV_DWH.ODP_CORE_STAGING` |
| `dbConnection` | `<DATABASE_CONNECTION_NAME>` | Default database connection name for the IDP platform. | `database-default` |
| `targetConnectionName` | `<DATABASE_CONNECTION_NAME>` | Default database connection name for the IDP platform. | `database-default` |
| `job_name` | `<JOB_NAME_VALUE>` | Fixed job name. | `LEXI_CONSENT_COMMON_SCHEMA` |
| `client_name` | `<CLIENT_NAME_VALUE>` | Client name. Identifies the client's jobs; used as the table name prefix and in the lexi_idp_config table data query. | `CNSTCLIENT` |
| `is_cls_refresh` | n/a | Determines whether CLS data is refreshed from the LEXI DB to the Snowflake DB by using the CLS API. | `1` |
| `continue_cls_on_error` | n/a | Determines whether the process continues or exits when errors occur while downloading CLS codes from LEXI by using the CLS API. If the CLS_CODE_LKP table contains no CLS records, the pipeline exits because it can't continue without CLS codes. If CLS codes already exist but the refresh fails, this parameter decides whether the pipeline continues. | `0` |
| `cls_export_endpoint` | n/a | Endpoint to which the CLS Export API call is made. | `clsExport` |
| `idl_date` | n/a | In the IDL case (the first data load), sets the initial date and time from which data is fetched from the source system. Used only when the LEXI_INTAKE_JOB table has no record, or no successful record, for the corresponding client, tenant, country, and system. | `'1901-01-01T00:00:00'` |
| `system_code` | n/a | Fixed system code of the source system. | `CONSENT` |
| `entity_name` | n/a | Entity name. | `CONSENT` |
| `s3 python script path` | `<S3_PYTHON_SCRIPT_PATH>` | Path of the location where the Python scripts for Consent are stored. | `root/Tools/lexi_python_scripts` |
| `s3_connection` | `<S3_CONNECTOR>` | Same value as the s3Connection parameter. | `s3_connector` |
| `database_name` | `<DATABASE_NAME>` | Snowflake database name. | `IDP_LEXIDEV_OMCH_USV_IDP01_ENV1_DWH` |
| `ocep_connection1` | `<SFDC_CONNECTION_NAME>` | SFDC connection name used in the LEXI_OCEP_Consent_Export_Baseline pipeline. | `sfdcsales_exp` |
| `oced_connection1` | `<SFMC_CONNECTION_NAME>` | SFMC connection name used in the LEXI_OCED_Consent_Import_Baseline pipeline. | `sfdcsales_imp` |
| `imp_type` | n/a | Type used for import pipelines. | `imp` |
| `exp_type` | n/a | Type used for export pipelines. | `exp` |
| `ocep_system_code` | n/a | System code used in the OCEP system. | `SFDCSALES` |
| `oced_system_code` | n/a | System code used in the OCED system. | `SFDCMARK` |
| `CORE_LOG_SCHEMA` | `<CORE_LOG_SCHEMA>` | Core log schema name, without the database name prefix. | `"ODP_CORE_LOG"` |
| `ocep_connection2` | `<OCEP_CONNECTION_NAME>` | SFDC connection name used in the LEXI_OCEP_Consent_Import_Baseline pipeline. | `"ocep_connection2_dev"` |

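Replacing the tags by hand is error-prone, so a small script can help. The Python sketch below assumes that the tags appear verbatim in the template, that no other text in the file matches the `<UPPER_CASE>` tag pattern, and that the staging schema lives in the same database as `database_name`. The values in `TAG_VALUES` are taken from the Example column of the parameter table and must be replaced with your own. After substitution, the script fails if any tag is left unreplaced or if the file is no longer valid JSON. The script and function names are hypothetical.

```python
import json
import re
import sys
from pathlib import Path

# Example values from the parameter table; replace them with your own.
DATABASE_NAME = "IDP_LEXIDEV_OMCH_USV_IDP01_ENV1_DWH"
TAG_VALUES = {
    # Assumption: the staging schema lives in the database named by <DATABASE_NAME>,
    # following the {{DatabaseName}}.{{staging schema}} format.
    "<STAGING_SCHEMA_VALUE>": f"{DATABASE_NAME}.ODP_CORE_STAGING",
    "<DATABASE_CONNECTION_NAME>": "database-default",
    "<JOB_NAME_VALUE>": "LEXI_CONSENT_COMMON_SCHEMA",
    "<CLIENT_NAME_VALUE>": "CNSTCLIENT",
    "<S3_PYTHON_SCRIPT_PATH>": "root/Tools/lexi_python_scripts",
    "<S3_CONNECTOR>": "s3_connector",
    "<DATABASE_NAME>": DATABASE_NAME,
    "<SFDC_CONNECTION_NAME>": "sfdcsales_exp",
    "<SFMC_CONNECTION_NAME>": "sfdcsales_imp",
    # Assumption: the template already wraps these two tags in JSON quotes.
    "<CORE_LOG_SCHEMA>": "ODP_CORE_LOG",
    "<OCEP_CONNECTION_NAME>": "ocep_connection2_dev",
}

# Matches any remaining tag of the form <UPPER_CASE_NAME>.
TAG_PATTERN = re.compile(r"<[A-Z0-9_]+>")


def fill_template(text: str, values: dict) -> str:
    """Replace each tag with its value, then check that the result is usable."""
    for tag, value in values.items():
        text = text.replace(tag, value)
    leftover = sorted(set(TAG_PATTERN.findall(text)))
    if leftover:
        raise ValueError(f"Unreplaced tags: {leftover}")
    json.loads(text)  # raises json.JSONDecodeError (a ValueError) if the JSON is broken
    return text


def main(path: str) -> None:
    template = Path(path)
    filled = fill_template(template.read_text(encoding="utf-8"), TAG_VALUES)
    template.write_text(filled, encoding="utf-8")  # overwrites the file in place


if __name__ == "__main__":
    main(sys.argv[1])
```

Run it as `python fill_template.py LEXI_IDP_Consent_Common_Schema_Import_Baseline_{{BuildNumber}}.json`. Because it overwrites the file in place, keep a copy of the downloaded template.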
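The conditional behavior that the parameter table describes for `continue_cls_on_error` and `idl_date` can be summarized as two small decision functions. This is an illustrative Python model of those descriptions, not the pipeline's actual code, and the function names are hypothetical. It assumes that `continue_cls_on_error = 1` means continue and `0` means exit, and that once a successful LEXI_INTAKE_JOB record exists, later runs start from that run's timestamp; the table itself only states when `idl_date` is used.

```python
from datetime import datetime
from typing import Optional

# Example idl_date value from the parameter table.
IDL_DATE = datetime.fromisoformat("1901-01-01T00:00:00")


def continue_after_cls_refresh(
    refresh_failed: bool, existing_cls_codes: int, continue_cls_on_error: int
) -> bool:
    """Return True if the pipeline should go on after a CLS refresh attempt."""
    if not refresh_failed:
        return True
    if existing_cls_codes == 0:
        # No CLS records in CLS_CODE_LKP: the pipeline cannot run without them,
        # so it exits whatever continue_cls_on_error says.
        return False
    # CLS codes already exist but the refresh failed: the flag decides.
    # Assumption: 1 means continue, 0 means exit.
    return continue_cls_on_error == 1


def extraction_start(
    last_successful_run: Optional[datetime], idl_date: datetime = IDL_DATE
) -> datetime:
    """Return the timestamp from which source data is fetched."""
    if last_successful_run is None:
        # No successful LEXI_INTAKE_JOB record for this client, tenant,
        # country, and system: this is the IDL case, so use idl_date.
        return idl_date
    # Assumption: later runs resume from the last successful run.
    return last_successful_run
```

With the example value `continue_cls_on_error = 0`, a failed refresh stops the pipeline even when CLS codes are already present.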
- Import the pipeline into your IDP environment, then open it and verify that the parameter values are set correctly.

- Create a new connection for CLS and Consent by using the Data pipeline maintenance feature.

-

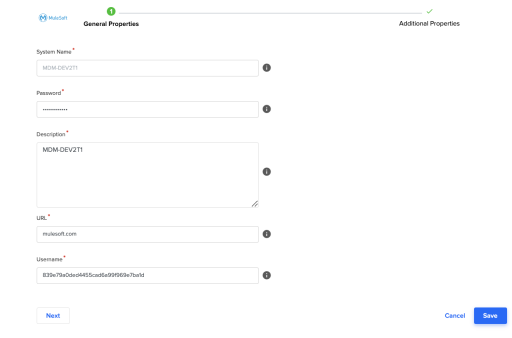

Select Mulesoft Anypoint from the list of connections.

-

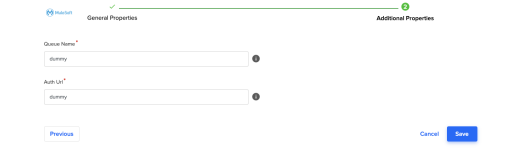

Provide the CLS API username and password as mentioned below and click Next. Then, provide dummy values and save. This connection name will be used as part of the CLS API metadata in the LEXI_API_CONFIG table.

- Create a record in the LEXI_API_CONFIG table, which stores the URL of the CLS API in the LEXI environment. The following is a sample INSERT statement; replace each placeholder with the correct value for your environment before running it.

```sql
-- Replace each '<...>' placeholder with the value for your environment.
INSERT INTO LEXI_API_CONFIG (lexi_api_name, lexi_api_url, lexi_environment_name, lexi_region, lexi_tenant_id, LEXI_CONNECTION_NAME)
VALUES ('CLS', '<https://iqvia-ts-cls-export-1-1-d6-u.us-e1.cloudhub.io/api/>', '<lexi-product-dev-us-003>', '<CompanyExternalId/CountryCode>', '<TenantID>', '<LEXI_CONNECTION_NAME>');
```
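Before running the insert, it can help to confirm that no sample placeholder was left in place. The Python sketch below uses the built-in `sqlite3` module and an in-memory table as a stand-in for the Snowflake LEXI_API_CONFIG table. The column types and the region, tenant, and connection values are assumptions; only the column names, URL, and environment name come from the sample statement. It treats any value still wrapped in angle brackets as an unreplaced placeholder and inserts the row with bound parameters rather than string concatenation. A Snowflake DB-API driver supports the same pattern, though its parameter placeholder syntax may differ. The function name is hypothetical.

```python
import sqlite3

# Column names from the sample INSERT; the TEXT types used below are an assumption.
COLUMNS = (
    "lexi_api_name",
    "lexi_api_url",
    "lexi_environment_name",
    "lexi_region",
    "lexi_tenant_id",
    "LEXI_CONNECTION_NAME",
)


def insert_api_config(conn: sqlite3.Connection, row: dict) -> None:
    """Insert one LEXI_API_CONFIG row, refusing values that are still <placeholders>."""
    unfilled = [
        col for col in COLUMNS
        if str(row[col]).startswith("<") and str(row[col]).endswith(">")
    ]
    if unfilled:
        raise ValueError(f"Placeholder values not replaced for: {unfilled}")
    column_list = ", ".join(COLUMNS)
    markers = ", ".join("?" for _ in COLUMNS)  # sqlite3 uses qmark parameters
    conn.execute(
        f"INSERT INTO LEXI_API_CONFIG ({column_list}) VALUES ({markers})",
        tuple(row[col] for col in COLUMNS),
    )
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for the Snowflake database
    conn.execute(
        "CREATE TABLE LEXI_API_CONFIG ("
        + ", ".join(f"{col} TEXT" for col in COLUMNS)
        + ")"
    )
    insert_api_config(conn, {
        "lexi_api_name": "CLS",
        "lexi_api_url": "https://iqvia-ts-cls-export-1-1-d6-u.us-e1.cloudhub.io/api/",
        "lexi_environment_name": "lexi-product-dev-us-003",
        "lexi_region": "US",                       # hypothetical value
        "lexi_tenant_id": "example-tenant",        # hypothetical value
        "LEXI_CONNECTION_NAME": "cls_connection",  # hypothetical value
    })
    print(conn.execute("SELECT * FROM LEXI_API_CONFIG").fetchall())
```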