Anypoint MQ HTTP Connector Plugin

The HTTP Connector Plugin is a batch plugin that downloads data from IDP (Snowflake database) and sends it in batches to HTTP endpoints such as MuleSoft Anypoint MQ, Amazon Simple Queue Service, Kafka, etc. The current release has been tested and deployed with MuleSoft Anypoint MQ. This document describes how to configure and run the plugin from IDP.

Prerequisites

The Anypoint MQ HTTP Connector Plugin has the following prerequisites:

- Create an Anypoint connection or use an existing one.
- Create the source tables, views, or materialized views from which the connector plugin reads the data. The source must have a field called JSON (VARIANT or VARCHAR data type; it may also hold non-JSON content) and a generated primary key.
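The source requirements above can be checked before a run. The following is a minimal sketch, assuming the source rows have already been fetched as Python dictionaries; the function name `check_source_rows` and the key column name `ID` are hypothetical and not part of the plugin.

```python
from collections import Counter
from typing import Any, Dict, List


def check_source_rows(
    rows: List[Dict[str, Any]],
    key_column: str = "ID",      # hypothetical key column name
    json_column: str = "JSON",   # field name required by the prerequisites
) -> List[str]:
    """Return a list of human-readable problems found in the source rows."""
    problems: List[str] = []

    # Every row needs a key, and keys must not repeat.
    key_counts = Counter(row.get(key_column) for row in rows)
    for key, count in key_counts.items():
        if key is None:
            problems.append(f"{count} row(s) have no {key_column}")
        elif count > 1:
            problems.append(f"key {key!r} appears {count} times")

    # The JSON field must be present; its content may be JSON or plain text.
    for row in rows:
        if row.get(json_column) is None:
            problems.append(f"row {row.get(key_column)!r} has no {json_column} value")

    return problems


if __name__ == "__main__":
    sample = [
        {"ID": 1, "JSON": '{"order": 42}'},
        {"ID": 2, "JSON": "plain text is allowed"},
        {"ID": 2, "JSON": None},
    ]
    for problem in check_source_rows(sample):
        print(problem)
```

The check deliberately does not parse the JSON field, because the field may hold non-JSON content.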

Steps

Follow the below steps:

-

Create a new Task Group or add the Connector Plugin as part of an existing Task Group.

-

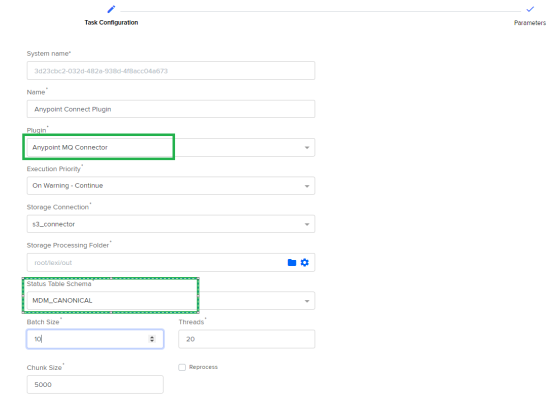

Add task under the task group created. While creating the task choose:

  - Plugin: Anypoint MQ Connector
  - Storage Processing Folder: The S3 folder the plugin uses to process the data.
  - Status Table Schema: The schema in which the status table is created after the data is processed.
  - Batch Size: For Anypoint MQ, the default batch size is 10. If the batch size is set above 10, the plugin falls back to 10 and processes the data.
  - Threads: The number of parallel processing threads. Set it according to your needs; 20 is the recommended value.
  - Chunk Size: The amount of data the plugin reads in parallel to send to Anypoint MQ. 5000 is the recommended value.
  - Reprocess: When selected, the plugin uses its built-in mechanism to reprocess data after a client failure.

-

-

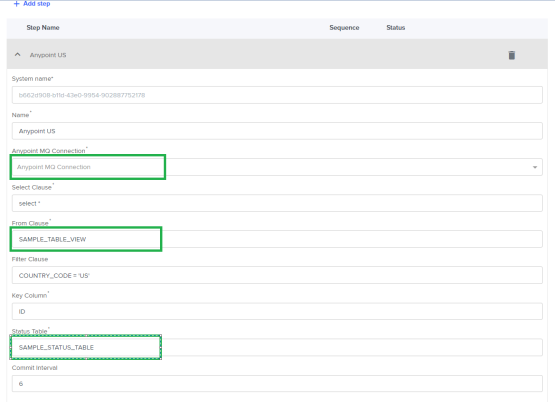

Save the task view and create Steps. While adding the step, choose the below. Multiple steps can bee added to send data to multiple Anypoint MQ:

  - Anypoint MQ Connection: Choose the Anypoint connection name from the dropdown. (These connections are created as part of the prerequisites.)
  - Select Clause: The default is select *.
  - From Clause: The name of the table or view that contains the key column and the JSON field.
  - Filter Clause: Optional filter logic to apply to the table or view when selecting the data to process.
  - Key Column: The unique key field in the table or view, used to report the status of each row.
  - Status Table: The name of the status table that records the transmission status of each row from the table or view.
  - Commit Interval: The interval at which status messages are written while the plugin is still processing. A default value is set.
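The clause and batch settings above can be pictured with a short sketch. It assumes the Select, From, and Filter clauses are combined into a single SELECT statement and that the Filter Clause is given without the WHERE keyword; the plugin's actual query construction is not documented here, and all function names are hypothetical. The cap of 10 comes from the Batch Size description.

```python
from typing import Iterator, List, Sequence

ANYPOINT_MAX_BATCH = 10  # per the Batch Size setting: larger values fall back to 10


def build_query(from_clause: str, select_clause: str = "*", filter_clause: str = "") -> str:
    """Combine the three step clauses into one statement (assumed composition)."""
    query = f"SELECT {select_clause} FROM {from_clause}"
    if filter_clause.strip():
        query += f" WHERE {filter_clause}"
    return query


def effective_batch_size(requested: int) -> int:
    """Cap the requested batch size at the Anypoint MQ limit of 10."""
    if requested < 1:
        raise ValueError("batch size must be at least 1")
    return min(requested, ANYPOINT_MAX_BATCH)


def split_into_batches(rows: Sequence, size: int) -> Iterator[List]:
    """Yield consecutive slices of at most `size` rows."""
    for start in range(0, len(rows), size):
        yield list(rows[start:start + size])


if __name__ == "__main__":
    # Hypothetical view name and filter, for illustration only.
    print(build_query("MY_SCHEMA.ORDERS_V", filter_clause="STATUS = 'NEW'"))

    chunk = list(range(25))             # stand-in for one chunk of rows
    size = effective_batch_size(50)     # 50 is requested, 10 is used
    print([len(batch) for batch in split_into_batches(chunk, size)])
```

With a requested batch size of 50, a chunk of 25 rows is split into batches of 10, 10, and 5 rows.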

Troubleshooting

- For any 404 errors in the status table, make sure the Anypoint MQ connections are valid.
- If the data (JSON field) from the table or view is large, reduce the Batch Size below 10.
- The plugin does not check the validity of the key column provided, so create it as a RANDOM or UUID field if needed for a single batch.
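One way to pick a smaller Batch Size for large payloads is to estimate how many of the largest JSON values fit in one request. The sketch below assumes a per-request byte budget, `max_request_bytes`, which is a placeholder to be replaced with the limit that applies to your Anypoint MQ setup; the function name is hypothetical and the plugin does not perform this calculation itself.

```python
from typing import Iterable


def suggest_batch_size(
    payloads: Iterable[str],
    max_request_bytes: int,   # placeholder budget, not a documented limit
    max_batch: int = 10,      # Anypoint MQ batch size cap from this document
) -> int:
    """Suggest a batch size so that even the largest payloads fit the budget."""
    # Size each payload in UTF-8 bytes and look at the largest ones first,
    # so the suggestion holds for the worst-case batch.
    sizes = sorted((len(p.encode("utf-8")) for p in payloads), reverse=True)

    total = 0
    count = 0
    for size in sizes[:max_batch]:
        if total + size > max_request_bytes:
            break
        total += size
        count += 1

    # Never suggest less than one message per batch.
    return max(count, 1)


if __name__ == "__main__":
    sample_payloads = ["x" * 400] * 20
    print(suggest_batch_size(sample_payloads, max_request_bytes=1000))
```

The estimate is conservative because it sizes the batch for the largest payloads rather than the average.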